Overview

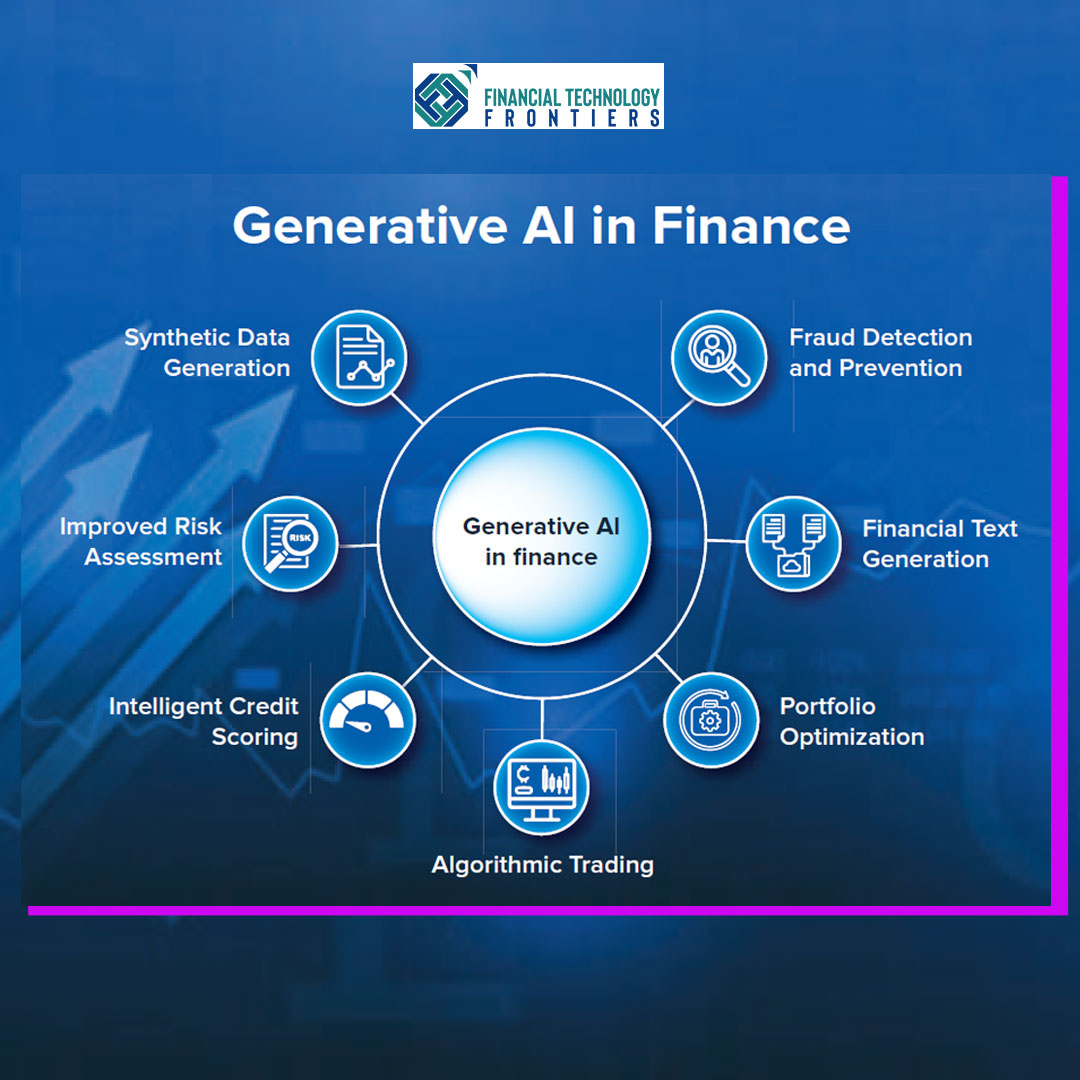

Artificial Intelligence (AI) is embedded deeply in financial services—from credit scoring and fraud detection to AML screening, customer service automation, and algorithmic trading. While these systems unlock significant business value, they also amplify two intertwined risk vectors: data privacy and security.

At the heart of these risks lie AI Language Models (LMs) that consume massive datasets drawn from structured and unstructured information: financial transactions, customer interactions, scanned documents, biometrics and other types of data. The breadth of this data introduces unprecedented vulnerabilities in AI systems that can compromise trust, compliance, and business continuity. The same data also strongly influences bias and unethical behavior in AI systems.

This Article explores key privacy and security risks and their business impacts. On the control side, this Article examines AI risk mitigation strategies by mapping controls from the NIST AI Risk Management Framework (NIST AI RMF) and SOC 2 Trust Services Criteria (SOC TSC). It also outlines a Responsible, Accountable, Consulted and Informed (RACI) governance model, cites real-world incidents, and concludes with a What Next roadmap for Financial Institutions (FIs).

About This Article

This Article offers Financial Technology Frontiers (FTF)’s analysis, from a control implementor’s perspective, of AI-induced data privacy and security risks, and addresses these risks by leveraging the NIST AI RMF and SOC TSC. It maps the applicable controls of the NIST AI RMF Core and SOC TSC to these risks to give the practitioner a ready-to-use approach to address them.

This Article is written in an accessible format to raise awareness about the perils of AI. FTF believes that financial service providers (such as banks, mortgage companies, credit card issuers and insurance companies), Fintech entities, consulting firms and technology companies can benefit from this Article and reflect on their respective approaches to AI Risk Management. Practitioners from other industries are welcome to adapt this Article to suit their context.

The Risk of Data in AI Models

AI models ingest a wide spectrum of data including but not limited to:

- Structured transaction histories.

- Credit scores, account balances and other financial information.

- Semi-structured inputs like customer support transcripts and email logs.

- Unstructured data such as images (e.g. scanned documents), voice recordings, and behavioral signals.

- Spatial information (e.g., maps).

- Human identifying attributes such as voice, biometrics, facial recognition, etc.

In many FIs, these data streams come from multiple financial systems that are used for their respective business functions (e.g., loan origination, payments, customer experience). They also originate from third-party data providers, or even public or web-scraped sources.

It is no surprise that the quality of data varies. Legacy systems may have missing records, inconsistent formats, or conflicting values. Data history may also be unclear: whether a dataset has been audited, whether its lineage has been tracked, or whether it has been manipulated or enriched may be unknown. Data manipulation, including feature engineering, use of synthetic data and data anonymization, introduces further risk when it is not well governed.

Consequently, data integrity is critical. If data has been corrupted, poisoned, or tampered with, whether intentionally or unintentionally, model outputs become unreliable. FIs cannot afford decisions based on corrupted data. Illustrations include risk models that produce unreliable credit risk scores, fraud detection measures that fail, and regulatory reports that contain inaccurate information. These issues call for strong integrity controls such as checksums, hashes, versioning, secure data transport and encryption protocols, and principled access controls to ensure that data entering the model is authentic and has not been tampered with.

Thus, the richness of data that powers AI systems also multiplies the risk surface, making disciplined data quality, integrity and prudent data management practices indispensable.
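The checksum and hash controls mentioned above can be illustrated with a short Python sketch. It assumes a simple workflow that is not described in this Article: a digest is recorded for each approved data file, and the files are re-checked before they are fed to a model. The function names (`sha256_of_file`, `build_manifest`, `find_tampered`) are hypothetical and chosen only for illustration.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(files: list[Path]) -> dict[str, str]:
    """Record one digest per file at the point the data is approved."""
    return {str(p): sha256_of_file(p) for p in files}


def find_tampered(manifest: dict[str, str]) -> list[str]:
    """Return paths that are missing or whose digest no longer matches."""
    tampered = []
    for name, expected in manifest.items():
        path = Path(name)
        if not path.is_file():
            tampered.append(name)  # a missing file is treated as an integrity failure
            continue
        # compare_digest performs a constant-time comparison of the two digests
        if not hmac.compare_digest(sha256_of_file(path), expected):
            tampered.append(name)
    return tampered
```

In practice the manifest itself would need protection (for example, storage in a write-restricted location), since an attacker who can alter both the data and the manifest would defeat the check.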

Causes / Contributing Factors

AI amplifies traditional data and privacy weaknesses, creating high-risk pressure points that are often underestimated in FIs.

- Fragmented Legacy Systems & Silos: Many FIs suffer from data trapped across older core banking systems, regional branches, or siloed customer service practices. Over 57% of banking executives report lacking a unified view of customer data, which causes AI models to see only parts of reality and produce flawed outputs.

- Dependency on Third-Party Vendors & Data Providers: FIs often use external data sources for customer profiling, credit risk, fraud scoring, or Know Your Customer (KYC). Vendor breaches are widespread. In 2024, 97% of leading U.S. banks reported third-party data breaches, indicating that vendor ecosystems are major risk channels for FIs.

- Inconsistent Data Quality & Governance: Given the variety of data that FIs manage, they face challenges in robust data classification, data cleansing, and data lineage tracking. These result in inconsistencies, duplication, or biased samples of data. As this information propagates across the institution, it carries these issues with it.

- Weak Encryption & Access Controls: Financial data is well known to be a prime target for cyber attackers globally. Technology systems that lack strong encryption (at rest and in transit), or that allow over-privileged or uncontrolled access, are vulnerable to both insider threats and external attackers.

- Lack of Continuous Monitoring: Many FIs set up initial security or privacy controls but do not monitor model outputs, data drift, unauthorized data access, vendor compliance, or model misuse in real time, so risks accumulate unnoticed. For example, if a third-party model provider’s security posture degrades, or if a vendor’s data pipeline introduces biased or corrupted records, those issues may go undetected. Ongoing auditing or real-time monitoring helps contain this exposure.

Lack of continuous monitoring could also lead to regulatory penalties for non-compliance. In 2020, the Office of the Comptroller of the Currency (OCC) fined a U.S. bank $60 million for vendor management control deficiencies. These included the bank’s inability to exercise proper oversight of its information security controls while decommissioning its wealth management business data centers, and its failure to adequately monitor the performance and compliance of its vendors. In this instance, data once considered safe (on decommissioned hardware) remained exposed because there were no checks to confirm proper disposal or data sanitization by the vendors. Although vendor contracts existed, vendor obligations were not enforced in real time or near real time; continuous oversight, monitoring, and auditing were missing.

These factors lead to several privacy and security risks in FIs.
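As an illustration of the data drift monitoring discussed above, the following Python sketch computes a Population Stability Index (PSI) between a baseline sample of a feature and a recent sample. PSI is one commonly used drift measure; it is an assumption of this sketch, not a technique prescribed by the frameworks discussed in this Article. The function name `psi`, the equal-width binning, and the small floor applied to empty bins are illustrative choices, and both samples are assumed to be non-empty.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # a small floor avoids log(0) when a bin is empty
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently cited rule of thumb treats a PSI below roughly 0.1 as stable and above roughly 0.25 as a significant shift, but such thresholds are conventions that each institution would need to validate for its own models.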

Data Privacy Risks of AI in FIs

- Unauthorized Data Collection & Processing. This risk relates to an AI system over-collecting PII (e.g., biometrics, behavioral data) without explicit consent. Further, AI systems are known to scrape or inherit third-party datasets that lack clear origin and / or ownership. Such conduct by an AI system can put the FI in violation of privacy laws and regulatory rules.

- Data Leakage through AI Outputs. This risk relates to an AI system exposing sensitive and / or confidential records via model hallucinations, especially when its LMs are trained on sensitive financial, personal and health data.

- Poor Data Anonymization & Reverse Engineering. This risk relates to an AI system using weak anonymization or synthetic data techniques to mask its data. This weakness could enable reverse engineering of real personal identities.

- Vendor & Third-Party Data Sharing. This risk relates to a vendor-provided and / or vendor-managed AI system’s behavioral traits, when data is entrusted to the Vendor for storage and processing. Typical players include cloud service providers, Fintechs, credit rating agencies, identity providers, fraud detection companies and others. This risk increases when these entities are exposed to data breaches.

- Cross-Border Payments, Data Transfers & Jurisdictional Compliance Gaps. This risk relates to an AI system that moves data across jurisdictions without proper risk management and compliance with applicable laws and local requirements (e.g., GDPR, CCPA, OSFI).
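The weak-anonymization risk listed above can be made concrete with a k-anonymity check, sketched here in Python. k-anonymity is one well-known measure of re-identification risk and is used here as an assumption; this Article does not prescribe it. The function names and the example field names (`zip`, `age_band`) are hypothetical.

```python
from collections import Counter


def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Return the size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values()) if groups else 0


def risky_groups(records: list[dict], quasi_identifiers: list[str], k: int = 5) -> dict:
    """Return quasi-identifier combinations shared by fewer than k records."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return {combo: n for combo, n in groups.items() if n < k}


if __name__ == "__main__":
    sample = [
        {"zip": "10001", "age_band": "30-39", "balance": 1200},
        {"zip": "10001", "age_band": "30-39", "balance": 560},
        {"zip": "10002", "age_band": "40-49", "balance": 98000},
    ]
    # The third record is unique on (zip, age_band), so it is easier to re-identify.
    print(k_anonymity(sample, ["zip", "age_band"]))
    print(risky_groups(sample, ["zip", "age_band"], k=2))
```

A dataset that passes such a check can still leak information through other channels, so this is a screening aid rather than proof of anonymity.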

Security Risks of AI in FIs

- Data Poisoning & Model Manipulation. This risk relates to malicious actors inserting corrupted training data that skews outputs like fraud detection or risk scoring in an AI system.

- Adversarial Attacks on Models. This risk relates to attackers crafting spurious inputs (e.g., adversarial credit information) that mislead the output generated by an AI system.

- Weak Encryption & Access Controls. This risk relates to cyber criminals gaining unauthorized access to an FI’s data in an AI system, both at rest and in transit. The risk is compounded by insider threats.

- Third-Party & Supply Chain Compromise. This risk relates to a vendor-provided and / or vendor-managed AI system’s behavioral traits, dependency on external APIs, externally pretrained models and externally managed cloud ecosystems that are inflicted with their own vulnerabilities.

- Inadequate Monitoring & Incident Response. This risk relates to lack of or inadequate real-time monitoring of an AI system for abnormalities like model drift, abnormal API calls, undetected intrusions and vendor breaches.
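To illustrate the monitoring of abnormal API calls mentioned in the last item above, here is a minimal Python sketch that flags clients whose latest call volume is far above their own history. The z-score rule, the threshold of 3.0, the floor on the standard deviation, and the function name `abnormal_callers` are all assumptions made for illustration; a production system would typically rely on dedicated monitoring tooling.

```python
from statistics import mean, pstdev


def abnormal_callers(
    history: dict[str, list[int]],
    latest: dict[str, int],
    z_threshold: float = 3.0,
) -> list[str]:
    """Flag clients whose latest call count is far above their own baseline."""
    flagged = []
    for client, count in latest.items():
        past = history.get(client, [])
        if len(past) < 2:
            flagged.append(client)  # no usable baseline: route to manual review
            continue
        mu = mean(past)
        # floor the spread so a perfectly flat history does not flag tiny changes
        sigma = max(pstdev(past), 1.0)
        if (count - mu) / sigma > z_threshold:
            flagged.append(client)
    return flagged
```

For example, a client that normally makes about ten calls per window and suddenly makes several hundred would be flagged, as would a client with no recorded history.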

Case Studies

A couple of real–world incidents demonstrate the seriousness of privacy and security risks involving AI systems and their impact.

- Biometric / Privacy Incident involving Clearview AI (2020–2021):

In 2022, a lawsuit alleged that Clearview AI had scraped billions of facial images without consent and used those images to build a biometric identification database. In 2025, a U.S. judge approved a class-action settlement over privacy claims in which Clearview was accused of violating biometric privacy laws.

- Security & Confidentiality Incident involving Samsung (2023):

In 2023, the electronics major Samsung experienced a major proprietary data leak. Reports indicate multiple instances of engineers feeding proprietary code and hardware information into ChatGPT. This sparked warnings at the highest levels of the company. Consequently, Samsung banned its employees from using ChatGPT.

Both incidents stem primarily from people and process deficiencies surrounding the use of AI systems. They underscore the need for strong governance and process controls over the use of AI systems.

Business Impact of Privacy & Security Risks

As with traditional technologies, risks in AI systems have multiple business impacts. These include:

- Financial losses in the form of direct theft of data, fraud, and regulatory penalties.

- Operational disruption in the form of downtime in core financial systems due to AI data corruption or cyberattacks.

- Reputational damage in the form of breaches leading to customer distrust and damage to brand credibility.

- Regulatory Non-Compliance in the form of hefty penalties for violations of privacy and security standards and requirements.

- Market instability and erosion of trust in the form of mispricing or flawed credit scoring in AI systems. This may in turn affect broader financial stability.

In summary, as AI models ingest sensitive financial and personal data, unchecked risks can quickly escalate into regulatory penalties, reputational harm, or systemic vulnerabilities.

Mitigation Measures

Effective mitigation measures are essential to ensure that AI-driven innovation in FIs does not come at the expense of privacy, security, or trust. Help is available in the form of frameworks such as the NIST AI RMF and SOC TSC. These frameworks provide structured, outcome-driven approaches to proactively manage AI risks. By embedding these measures across people, governance, processes, and technology, FIs can balance innovation with risk and accountability. Given below are a few controls from these frameworks that address risks in using AI systems.

| Domain | Risk | NIST AI RMF | SOC TSC |
|---|---|---|---|
| Privacy | Unauthorized Collection & Processing | MAP 1.1: Define lawful AI data contexts. | CC3.1: Risk assessment clarity in objectives. |
| Privacy | Data Leakage via AI Outputs | MANAGE 4.1: Post-deployment monitoring & override plans. | CC7.3: Security event evaluation & containment. |
| Privacy | Poor Anonymization | MEASURE 2: Test trustworthiness. | CC8.1: Privacy by Design: limit unnecessary personal data collection. |
| Security | Data Poisoning | MAP 4.2: Document risk controls for third-party data. | CC9.2: Assess & manage vendor risks. |
| Security | Adversarial Attacks | MEASURE 3: Continuous monitoring. | CC7.4: Incident response execution. |
| Security | Weak Encryption | GOVERN 1.2: Embed trustworthy AI characteristics in organizational policies. | CC6.1: Use encryption to protect data. |

Roles and Responsibilities to manage Privacy and Security risks in AI systems

Managing privacy and security risks in AI systems requires clear ownership and accountability across the FI. A well-structured Responsible, Accountable, Consulted, and Informed (RACI) model ensures that responsibilities are not only assigned but also executed in a way that balances compliance, security, ethics, and business impact. By defining who plays each key role under the RACI model, FIs can embed risk governance and management into daily operations, reduce blind spots, and align AI risk management with both regulatory obligations and strategic goals. Clarity of roles also strengthens trust, enhances resilience, and ensures AI systems are deployed safely and responsibly. Given below is a high-level RACI model for managing privacy and security risks in AI systems.

| Role | Responsible | Accountable | Consulted | Informed |
|---|---|---|---|---|
| CISO / Head of Cybersecurity | Security controls, encryption, monitoring. | | | |
| Chief Data Officer (CDO) | Privacy, data lineage, anonymization. | | | |
| Chief Risk Officer (CRO) | AI risk alignment with enterprise risk appetite. | | | |
| Legal & Compliance Officers | Regulatory mapping (GDPR, OSFI, OCC, etc.). | | | |
| Data Ethics Committee | Fairness & bias checks. | | | |
| Board of Directors | Oversight of AI risk. | | | |
| Business Leaders | Affected parties of AI outputs. | | | |

The Way Ahead

As FIs expand their use of AI, the maturity of their privacy and security practices becomes a defining factor for organizational resilience and trust. This requires embedding governance, strengthening vendor oversight and continuously monitoring dynamic risks in AI systems. A few takeaways include the following:

- Embedding governance through development of AI risk focused policies that enable institutionalizing an AI risk culture.

- Enhancing vendor oversight practices through rigorous and cyclic vendor risk assessments.

- Adopting continuous monitoring techniques to address the dynamic and ever-changing AI risk landscape.

- Investing in privacy by design principles and supporting technologies.

- Enhancing board-level accountability through mandatory AI risk oversight to measure progress against defined KPIs.

By investing in these privacy and security risk measures and ensuring board-level accountability, FIs can transform their risk and compliance activities into a competitive advantage and safeguard against AI threats.

About Financial Technology Frontiers

Financial Technology Frontiers (FTF) is a global media–led fintech platform dedicated to building and nurturing innovation ecosystems. We bring together thought leaders, financial institutions, fintech disruptors, and technology pioneers to drive meaningful change in the financial services industry.

All sources are hyperlinked.